Problematic content

Over the past few years, there has been much discussion about “fake news” and the spread of “problematically inaccurate information” (Jack 1) online and in the media. What distinguishes one type of problematic content from another is intent.

Misinformation is information that is incorrect or inaccurate but unintentionally so (2).

Disinformation is purposeful in its inaccuracy and is often created to deceive or cause confusion (3).

However, regardless of intent, dis- or misinformation is problematic because it has the potential to cause physical, emotional, political, and financial harm to individuals, communities, and society. This was seen most recently (at the time of this writing) during the 2020 U.S. elections and the COVID-19 pandemic.

Over and over and over...

The more frequently and consistently a piece of information is repeated, the more people tend to believe it. This holds whether someone is hearing dis- or misinformation that is clearly false or hearing a denial or debunking of that dis- or misinformation (Clark; Illing; Lewandowsky; Nyhan and Reifler). For instance, repeated exposure to misinformation about COVID-19 led some people to drink bleach in an effort to kill the virus or to demand prescriptions for hydroxychloroquine from their doctors (Satariano). Those who stormed the Capitol on January 6, 2021, did so because they believed that there had been widespread voter fraud during the 2020 election cycle, that Donald Trump had truly won the presidential vote, and that democracy was under attack (Bond; Rash); they believed this because these “continuous assertions” (Rash) were repeated frequently by then-President Trump and his followers.

When people hear information repeatedly, it sticks in their minds, which makes it difficult for them to accept the truth when confronted with it. This is not limited to social media, and it is not a new phenomenon: in the 1940s, it took an FBI investigation to formally disprove the existence of secret grassroots “Eleanor Clubs” that were purported to have organized Black women, yet many newspapers refused to believe the findings or publish retractions (Zeitz).

Nyhan and Reifler recommend that journalists “get the story right the first time” and that, if corrections are needed, they publish them as soon as possible, avoid negations, and keep repetition to a minimum.

Read it, believe it, share it

Although misinformation is a problem on social media platforms, the number of people sharing dis- and misinformation and “fake news” is not as high as previously suspected, and a small percentage of users accounts for the greatest share of the misinformation that is spread (Altay et al.; Guess et al.). Altay et al. assert that people want to maintain their reputations, and spreading false information could damage their credibility, especially because trust is slow to earn but quick to lose (3). However, things get more complicated when the intent is not to trick or fool anyone, yet social media users are still taken in by convincing content.

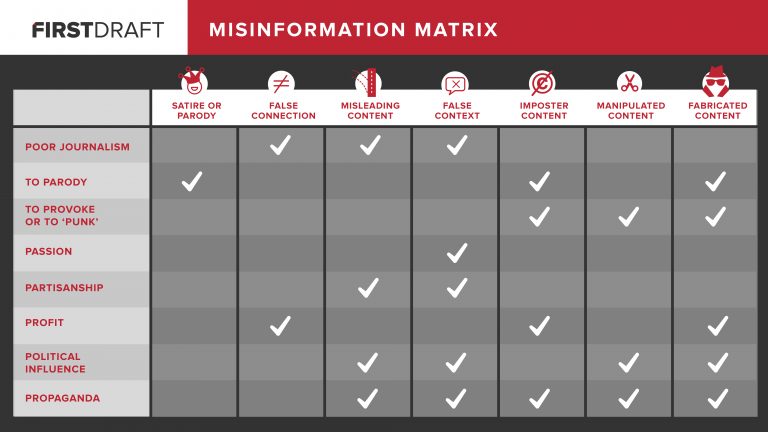

| Type of Motivation | Satire or Parody | False Connection | Misleading Content | False Context | Imposter Content | Manipulated Content | Fabricated Content |
|---|---|---|---|---|---|---|---|
| Poor Journalism | no | yes | yes | yes | no | no | no |
| To Parody | yes | no | no | no | yes | no | yes |
| To Provoke or to Punk | no | no | no | no | yes | yes | yes |
| Passion | no | no | no | yes | no | no | no |
| Partisanship | no | no | yes | yes | no | no | no |
| Profit | no | yes | no | no | yes | no | yes |
| Political Influence | no | no | yes | yes | no | yes | yes |
| Propaganda | no | no | yes | yes | yes | yes | yes |

Satire or parody sits at the low end of the intent-to-deceive scale: its main motivation is humor, spoofing or mocking its target, although it may still have the potential to deceive (Wardle). While Allcott and Gentzkow exclude satire from their definition of “fake news” (214), a study by Garrett et al., using articles from The Babylon Bee and The Onion, two popular satire websites, found that some people cannot differentiate between news stories and satirical posts. One of the study’s suggestions is to clearly label such articles as satire when they are posted to social media or appear in search results, although Scott Dikkers, founder of The Onion, and many long-time readers of The Onion take offense that people cannot tell the difference between real news, “fake news”, and the stories posted on satirical websites (Hutchison).

Further, even Facebook’s own moderators and algorithms sometimes get it wrong. A “honeypot” group called Vaccines Exposed, whose members debated anti-vaxxers in an effort to change their minds, was removed from the platform in January 2021 (Rogers). In March 2021, Matt Saincome, co-founder of the satirical music website The Hard Times, posted on Twitter asking for help appealing Facebook’s decision to remove some of the site’s posts for violating community standards, a decision that also put the page at risk of being removed from the platform entirely.

Wading through all the muck

As recently as March 2021 (at the time of this writing), heads of major social media and tech companies testified about misinformation, its proliferation on their platforms, and the possible solutions for mitigating its spread (Bond).

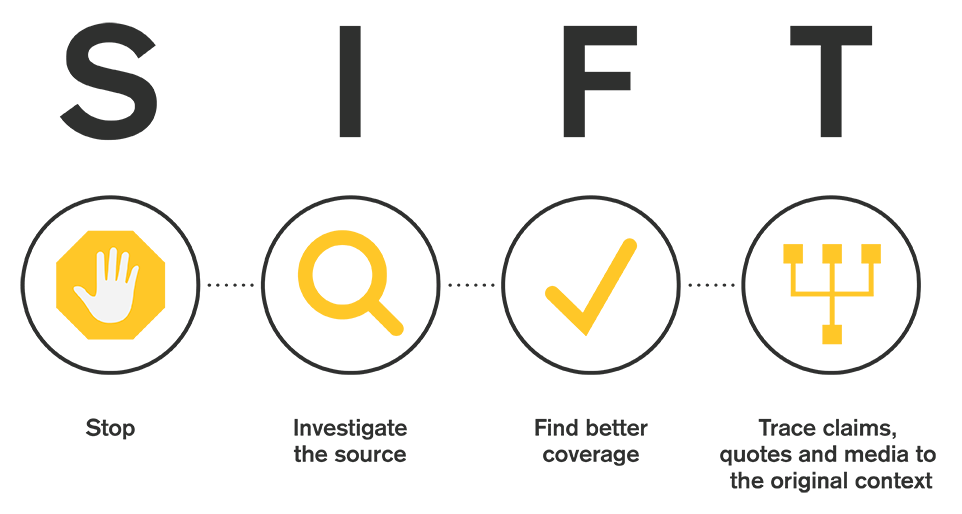

It’s important to address the problem at a systemic level, focusing on how misinformation spreads rather than blaming specific individuals, which can intensify stigma, further isolate communities, and deepen susceptibility to misinformation (Gyenes and Mina). Having research, citation, and media literacy skills is helpful, but it is the first step, not the only step (boyd). Caulfield proposes a short list of “things to do” when examining a source (“SIFT”):

- Stop;

- Investigate the source;

- Find better coverage; and

- Trace claims, quotes, and media to the original context.

These moves come at a time when new techniques are needed for research and analysis. While older techniques have their place, they do not account for the changes in today’s technologies, such as the massive amount of information available on the internet and the validity of websites like Wikipedia (boyd; Caulfield, “Yes, Digital Literacy”; Caulfield, “SIFT”). In addition to knowing how to check the currency, authority, purpose, or bias of a source, people also need the skills to catch other clues, such as political bias, white supremacist ideology, or straight-up manufactured details (Caulfield, “Yes, Digital Literacy”). As mentioned above, once someone knows that The Onion publishes satirical news, they are at very low risk of spreading its stories as if they were real.

Responsible solutions for everyone

Many social media companies already have policies in place to deal with misinformation on their platforms; some of these practices include labeling content as misleading or disputed, removing content, banning users, adding prompts before sharing, and allowing users to report content or other users (Mozilla Foundation). However, many political figures contend that this is not enough. This past March (at the time of this writing), heads of major social media and tech companies testified via video before Congress about misinformation, its proliferation on their platforms, and the possible solutions for mitigating its spread (Bond). Several representatives have called for reforming or even repealing Section 230 of the Communications Decency Act, which states:

No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

(United States Code)

With Section 230, social media companies are free from liability for what their users post on their platforms, but if they do not address the systemic issues of dis- and misinformation (including hate speech) on their platforms, it sends the message that targeted groups are “second class citizens” (Citron and Norton, qtd. in Piernock 9). Yet repealing Section 230 completely could have “unintended consequences” (Pichai qtd. in Bond), as some sites may over-moderate their content and others may not be able to host user-generated content at all because of the burden of moderation. On the other hand, these platforms can build content moderation guidelines, using Section 230 as a tool along with Lessig’s four forces of regulation, to help mitigate the spread of misinformation (Piernock 12). Some examples may include:

- platforms providing clearer methods for users to report or assert that content is misinformation or is unacceptable;

- platforms and users debunking misinformation when possible;

- platforms applying overlays or labels to misleading or deceptive content; and

- platforms providing greater transparency in moderation guidelines.

Saltz et al. provide multiple design techniques for labeling misinformation on social media platforms. Based on solid usability and user experience principles, these techniques could promote digital literacy by clearly labeling content and also presenting new approaches to deciphering the various clues of misinformation.

And in conclusion

Misinformation and “fake news” have been around as long as real news itself, maybe even longer (Soll), and their scope is not limited to social media and the internet. Nevertheless, a new type of digital and media literacy is required for future progress, and skills in analyzing content need to keep up with fast-paced advances in technology. Combining the techniques proposed by Caulfield, the principles recommended by Saltz et al., and changes in platform moderation may help flatten the curve of this “misinfodemic” (Gyenes and Mina).